Warning: What You Can Do About DeepSeek AI Right Now

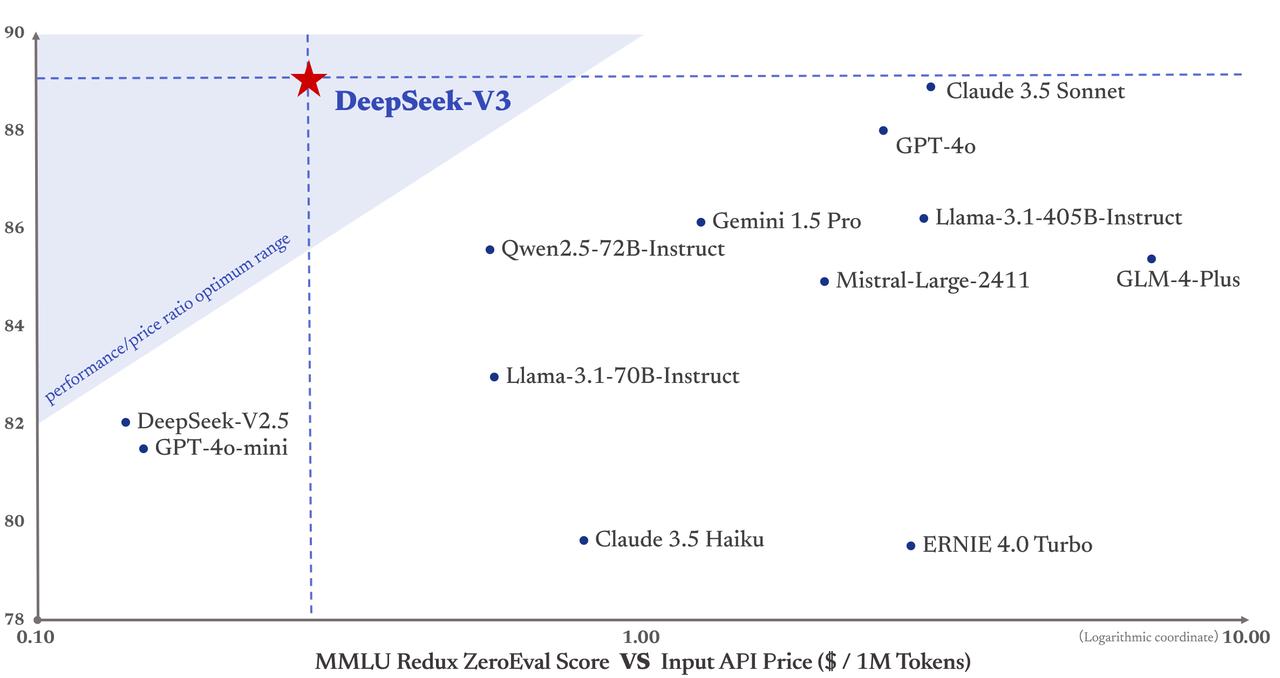

AI companies spend a lot of money on computing power to train AI models, which requires graphics processing units from firms like Nvidia, Sellitto said. DeepSeek's impressive performance at a fraction of the cost of other models, its semi-open-source nature, and its training on significantly fewer graphics processing units (GPUs) have wowed AI experts and raised the specter of China's AI models surpassing their U.S. counterparts. This has made reasoning models popular among scientists and engineers who are looking to integrate AI into their work. This approach makes the initial results more erratic and imprecise, but the model itself discovers and develops unique reasoning strategies to keep improving. But unlike ChatGPT's o1, DeepSeek is an "open-weight" model that (although its training data remains proprietary) lets users peer inside and modify its algorithm. Now, R1 has also surpassed ChatGPT's latest o1 model in many of the same tests. Plus, DeepSeek is facing privacy concerns similar to those TikTok has had to deal with for years, which could drive some users away. Just as important is its lower price for users: 27 times less than o1. But if you don't need as much computing power as was assumed, as DeepSeek claims, that could lessen reliance on Nvidia's chips, hence Nvidia's declining share price.

That is how you get models like GPT-4 Turbo from GPT-4. DeepSeek claims responses from its DeepSeek-R1 model rival other large language models like OpenAI's GPT-4o and o1. Those surprising claims were part of what triggered a record-breaking market value loss for Nvidia in January. On top of that, DeepSeek still has to prove itself in the competitive AI market. In the long run, cheap open-source AI is still good for tech companies in general, even if it may not be great for the US overall. The FTSE 100 index of the UK's biggest publicly listed companies was also steady on Tuesday, closing 0.35% higher. On Monday, chipmaker Nvidia's shares slumped 17%, wiping out $600 billion in market value, the biggest one-day loss ever for a public company. Unfortunately for DeepSeek, not everyone in the tech industry shares Huang's optimism. In rarely reported interviews, Wenfeng said that DeepSeek aims to build a "moat" (an industry term for barriers to competition) by attracting talent to stay at the cutting edge of model development, with the ultimate goal of reaching artificial general intelligence. Cost-effectiveness: a freemium model is available for general use.

Nvidia's quarterly earnings call on February 26 closed out with a question about DeepSeek, the now-infamous AI model that sparked a $593 billion single-day loss for Nvidia. Meta Platforms grew revenue 21% year over year to $48.39 billion in Q4, according to an earnings statement. Given its meteoric rise, it is not surprising that DeepSeek came up on Nvidia's earnings call this week, but what is surprising is how CEO Jensen Huang addressed it. Considering the market disruption DeepSeek caused, one might expect Huang to bristle at the ChatGPT rival, so it is refreshing to see him praising what DeepSeek has achieved. It remains to be seen how DeepSeek will fare in the AI arms race, but praise from Nvidia's Jensen Huang is no small feat. The past few weeks have seen DeepSeek take the world by storm. We have reviewed contracts written with AI assistance that contained multiple AI-induced errors: the AI emitted code that worked well for known patterns but performed poorly on the actual, customized scenario it needed to handle.

It's important to note that Huang specifically highlighted how DeepSeek could improve other AI models, since they can copy the LLM's homework from its open-source code. Furthermore, when AI models are closed-source (proprietary), biased systems can more easily slip through the cracks, as was the case for numerous widely adopted facial recognition systems. This achievement significantly narrows the performance gap between open-source and closed-source models, setting a new standard for what open-source models can accomplish in challenging domains. Although Google's Transformer architecture currently underpins most LLMs deployed today, for instance, emerging approaches for building AI models such as Cartesia's Structured State Space models or Inception's diffusion LLMs, both of which originated in U.S. And more critically, can China now bypass U.S. "Through several iterations, the model trained on large-scale synthetic data becomes significantly more powerful than the originally under-trained LLMs, leading to higher-quality theorem-proof pairs," the researchers write. In these three markets (drones, EVs, and LLMs), the secret sauce is doing fundamental, architectural research with confidence.

It's necessary to note that Huang specifically highlighted how DeepSeek may enhance other AI fashions since they'll copy the LLM's homework from its open-supply code. Furthermore, when AI fashions are closed-supply (proprietary), this may facilitate biased systems slipping via the cracks, as was the case for numerous extensively adopted facial recognition systems. This achievement significantly bridges the performance hole between open-source and closed-supply models, setting a brand new standard for what open-source models can accomplish in difficult domains. Although Google’s Transformer architecture presently underpins most LLMs deployed today, as an illustration, emerging approaches for building AI models corresponding to Cartesia’s Structured State Space models or Inception’s diffusion LLMs-both of which originated in U.S. And more critically, can China now bypass U.S. "Through a number of iterations, the model skilled on large-scale artificial information turns into considerably extra powerful than the initially under-educated LLMs, leading to greater-quality theorem-proof pairs," the researchers write. In these three markets: drones, EVs, and LLMs, the key sauce is doing fundamental, architectural analysis with confidence.

If you have just about any inquiries relating to wherever in addition to the way to work with DeepSeek v3, you'll be able to call us at our page.

- 이전글Tattoo Minimaliste à Montréal : L'Art de la Simplicité 25.03.19

- 다음글페이 25.03.19

댓글목록

등록된 댓글이 없습니다.